How to configure a Docker + VS Code development environment

After working with Docker for a while on some projects, I arrived at a configuration that works well for me and my colleagues, so I decided to share it in case it’s useful to more people.

Keep in mind it’s based on my personal experience, so it might not follow best practices or apply to your case. Suggestions for improvements are welcome, though!

I’ll show you how to set up a full project from scratch and run it completely isolated within containers, so the environment will (hopefully) be reproducible and run the same, no matter your host OS.

I use Ubuntu as my daily driver, so this guide will be from that perspective, but you should be able to adapt it to your OS of choice without much trouble. It’ll probably work well in Windows using WSL 2.

Table of Contents

- The project

- Requirements

- Starting out

- The backend app

- The frontend app

- A simple nginx proxy

- VS Code for the win

- Addendum

- Conclusion

The project

To illustrate all steps, and be as realistic as possible, we’ll create a basic web application using the following stack:

- Backend: Python (Django)

- Database: PostgreSQL

- Frontend: VueJS (Quasar)

At the end we’ll have a consistent environment for code editing with:

- Code-completion

- Linting

- Debugging

- Well defined editor settings for everyone working on the project

The stack choice is based on what I’m most familiar with, but all the concepts in this guide should apply to any stack you choose.

Requirements

We need very few things installed locally:

- Docker 19+ (a recent version)

- docker-compose v1.27+

- VS Code 1.5+

And these Code extensions:

Starting out

To make it easier for you to follow, I’ve set up a GitHub repository with all the code at https://github.com/davidrios/example-docker-project. I’ll refer to specific commits so you can see the structure along the way.

We are starting the project from scratch, so the first thing we need is to sort out its structure. This is the one I like to use:

- Project root
  - some docker stuff and README
  - conf
    - project 1
    - project 2
    - misc
    - …
  - code
    - project 1
    - project 2
    - …

The next thing we need is to have some basic containers running to bootstrap the projects.

We already know we need at least 3 containers from the project description: backend, frontend and database, so we need to create a base docker-compose.yml. But before that, one of the most useful things is to have a .env file, so you can have some amount of customization over the containers.

Let’s create the initial .env.template file, which each user will copy locally and customize:

LOCAL_USER_ID=1000

POSTGRES_PASSWORD=password

The LOCAL_USER_ID is used to work around Docker permission issues on Linux (more on that later). You set it to the uid of your local computer user, as returned by the command id -u. You can just ignore it if you are on Mac.
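If you would rather not edit the copy by hand, the step above can be sketched in a few lines of Python. This is only an illustration and not part of the example repository: the function names render_env and write_local_env are made up for this sketch, and it assumes the template contains a LOCAL_USER_ID= line like the one shown above.

```python
import os
from pathlib import Path


def render_env(template_text: str, uid: int) -> str:
    """Return the template text with LOCAL_USER_ID set to the given uid."""
    lines = []
    for line in template_text.splitlines():
        if line.startswith("LOCAL_USER_ID="):
            line = f"LOCAL_USER_ID={uid}"
        lines.append(line)
    return "\n".join(lines) + "\n"


def write_local_env(template_path: str = ".env.template",
                    env_path: str = ".env") -> None:
    """Copy the template to a local .env, filling in the current user's uid."""
    template_text = Path(template_path).read_text()
    # os.getuid() is the same number that `id -u` prints (Unix only).
    Path(env_path).write_text(render_env(template_text, os.getuid()))
```

Calling write_local_env() from the project root would create the .env file next to the template. All other variables are copied unchanged, so you can still tweak them afterwards.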

After creating my local .env copy, it’s time to define the basic docker-compose.yml:

https://github.com/davidrios/example-docker-project/blob/2aac6eb151104b205461d025ca07647c44bc5d36/docker-compose.yml

Things of note:

- I import the .env file at lines 3-5 and pass all defined variables as environment vars to all the other containers. You can see that at lines 10-11, 19-20, etc.

- I’m using Alpine images with major and minor versions specified, as per best practices. You may want or need to use an Ubuntu/Debian/etc. based image, but you should always choose specific major/minor versions.

- There is a volume defined for the PostgreSQL data, so that it is preserved between runs.

- I run every project I can as a standard (non-root) user in the containers, and I like to create a volume for that user’s home on every container where I expect to log in and execute commands. That way the shell history and other stuff are preserved between rebuilds. This is an optional, personal preference; you can just remove those volumes. Be aware that, while convenient, they may have subtle effects on the overall reproducibility of the environment.

- The backend and frontend don’t have any application configured yet, but I need them running anyway to be able to bootstrap the apps, so I just tell them to run a very long sleep.

At this time we can navigate to the main project repository and just run:

$ docker-compose up --build

You should see some messages from PostgreSQL about the initialization of the database and the three containers should keep running.

The backend app

I like to execute the app as a regular user, and to avoid permission issues on Linux, that user needs to have the same uid as my local computer user. That’s the reason for the LOCAL_USER_ID in the .env file. To do that we’ll add some helper scripts to be used inside the container:

- base.sh

- run.sh

- enter.sh

We’ll add them to a directory conf/backend/scripts, as decided earlier. These scripts will only be executed on the dev environment, so they’ll not be a part of the image.

We’ll also create a Dockerfile for the container with some needed initial customizations.

The compose file will be updated with the new image and mounts for the code and scripts. The code directory will also be created and docker-compose will be executed again:

Check how the project has changed:

https://github.com/davidrios/example-docker-project/commit/04ae1e95ca8dfe752e76a67fce5b9882847f2f8e

## *** stop docker-compose ***

$ mkdir code

$ docker-compose up --build

Now we have the container running and ready to create the Django project. We use the enter.sh script to enter the container with the venv already activated and logged in as the correct user. Now let’s create the project:

## in another terminal at the root of the project folder:

## Create the database:

$ docker-compose exec -u postgres postgres-db createdb -T template0 -E utf8 backend

## Enter the backend container:

$ docker-compose exec backend /scripts/enter.sh

## Now inside the container:

$ cd /code

$ pip install --no-binary psycopg2 django psycopg2

$ django-admin startproject backend

$ cd backend

## Save the requirements:

$ pip freeze > requirements.txt

## *** Edit backend/settings.py to connect to the PostgreSQL db using the password from the environment and the host/dbname/user we already defined ***

$ python manage.py migrate

$ python manage.py createsuperuser

## *** insert desired login information ***

## Test the application runs:

$ python manage.py runserver

## *** Everything seems ok, stop the server and exit the container ***

Check the files added, with special attention to the database configuration:
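As a rough sketch of what the database part of backend/settings.py could look like, here is a small helper that builds the DATABASES setting from the environment. It is an illustration, not a copy of the repository's file: the helper name database_settings is made up, the database name and host come from the commands above, and the user and port are assumptions based on the defaults of the PostgreSQL image.

```python
import os


def database_settings(environ=os.environ):
    """Build a Django DATABASES dict pointing at the PostgreSQL container."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": "backend",          # the db created earlier with createdb
            "USER": "postgres",         # assumption: default superuser of the image
            "PASSWORD": environ["POSTGRES_PASSWORD"],  # passed in from .env
            "HOST": "postgres-db",      # the compose service name
            "PORT": "5432",             # assumption: default PostgreSQL port
        }
    }


# In backend/settings.py this would be used as:
# DATABASES = database_settings()
```

Reading the password with environ["POSTGRES_PASSWORD"] instead of environ.get() makes Django fail at startup if the variable is missing, which is usually easier to diagnose than a failed database login later.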

Now we need to set the application to run automatically with the container and to expose the port so we can access it from our local browser. The installation of app packages will also be added to the image.

To be able to run locally on any port we choose and not conflict with other running stuff, we’ll add that option to the .env.template file and the same line to our .env:

APP_PORT=8000

and update the docker stuff. Check the diff:

https://github.com/davidrios/example-docker-project/commit/c9276155e56f1f8c0168bb81902e5e0f22ed0dad

Now stop docker-compose and run it again, always with --build. The application should now be running and accessible at http://localhost:8000 (or other port if you changed it).

This is it for now for the backend. VS Code configuration instructions will follow later in the guide.

The frontend app

This one is pretty similar to the backend. We’ll create the same three helper scripts, with specific changes for the new environment, and customize the image a bit.

At this point we’ll also add a .dockerignore file, so that Docker doesn’t have to copy useless stuff every time it builds an image. That’ll speed up the process a lot if you already have a big project.

Take a look at the changes:

https://github.com/davidrios/example-docker-project/commit/bbb2c6180437c8e1ae5b3b0ca2121e78dc1250af

## *** We stop docker-compose and start it again ***

$ docker-compose up --build

We bootstrap the Quasar project the same way:

$ docker-compose exec frontend /scripts/enter.sh

$ yarn global add @quasar/cli

$ cd /code

$ ~/.yarn/bin/quasar create frontend

## *** choose quasar options ***

## Pick at least ESLint because it's used later.

## I'll also pick TypeScript to demonstrate the great VS Code support later

## And finally choose an ESLint preset you wanna use on your project. I'll stick with standard here

## Let Quasar install the packages with yarn and get out of the container

Now we’ll configure the frontend container to start Quasar in dev mode automatically and expose the port so that we can access it.

Here are the resulting changes:

https://github.com/davidrios/example-docker-project/commit/73e3b55df164ea2e1477cb51a8d591299c5f4643

Note that we just moved the exposed port from the backend to the frontend container. We’ll fix that in a bit.

## *** We stop docker-compose and start it again ***

$ docker-compose up --build

The Quasar app should now be running and accessible at http://localhost:8000 (or other port if you changed it).

A simple nginx proxy

To make our lives easier, we’ll create a simple but flexible nginx proxy in front of everything. We need to use a custom config file, and for maximum flexibility we’ll process the config and interpolate with the env vars before starting the server.

These are the resulting changes:

https://github.com/davidrios/example-docker-project/commit/b07c83199fd8d4b1cd4dfce1e830a19e76b64543

We can now access the frontend app at http://localhost:8000, the Django admin at http://localhost:8000/admin and nginx will also proxy all requests to /api* to the backend app.
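To illustrate the idea of interpolating the config with env vars before starting the server, here is a minimal Python sketch. It is not the script from the repository (which may well use a shell tool instead); the function name render_config and the NGINX_PORT variable are hypothetical. The one detail worth showing is that only ${NAME} placeholders with a known name are replaced, so nginx's own variables such as $host are left alone.

```python
import re

# Matches placeholders written as ${NAME}; plain $name is left to nginx.
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def render_config(template: str, variables: dict) -> str:
    """Replace ${NAME} placeholders whose name is in `variables`."""
    def replace(match):
        # Unknown names are returned unchanged instead of raising.
        return variables.get(match.group(1), match.group(0))
    return PLACEHOLDER.sub(replace, template)


# Hypothetical template fragment; service names follow the compose file.
TEMPLATE = """\
server {
    listen ${NGINX_PORT};
    location /api {
        proxy_pass http://backend:8000;
        proxy_set_header Host $host;
    }
}
"""

rendered = render_config(TEMPLATE, {"NGINX_PORT": "80"})
```

In a container, the variables dict would typically be dict(os.environ), and the rendered text would be written to the nginx config path before launching nginx.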

VS Code for the win

Now that we have our whole project well organized and running isolated from our local machine, we need to start coding it. Wouldn’t it be awesome if we could have the same consistent and pleasant experience while coding as well? That’s when VS Code comes to the rescue!

We’ll use the remote containers extension to run VS Code directly inside the app containers, so it’ll have access to the environment exactly as it runs and we can install extensions without affecting our local installation.

We will be using a directory named code/vscode to put related stuff, just so it is already available inside the containers because of the /code mount. You could put it in another directory, but then you would have to set up another mount for each container.

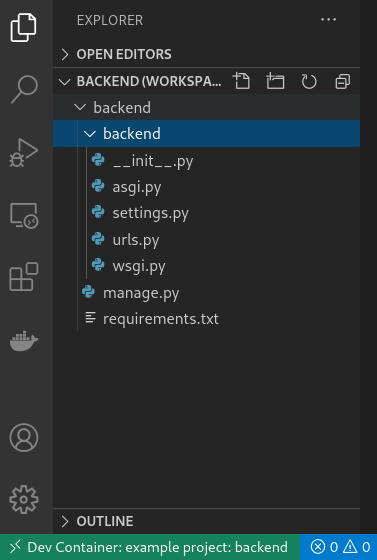

Configuring for the backend

First create the directory code/vscode/backend and add the following files:

- backend.code-workspace: Replace backend with the real name of your project, so it’s easier to tell the Code windows apart when navigating.

- flake8.ini: Where we put the linter settings for Python. We’ll be using flake8 instead of pylint, since it is much faster and I like it better :).

- .devcontainer.json: Tells Code how to set up the container instance.

It’s also necessary to edit the container’s base.sh script, so that it installs the necessary packages after any rebuild and starts Python in debug mode. As a nice touch we’ll also configure the server to autoreload when any file changes using Watchdog, since the Code debugger extension for Python doesn’t support Django’s autoreload. As a bonus, Watchdog uses much less CPU than Django’s default autoreloader.

I’ve gone ahead and added our first test API.

Check it out:

https://github.com/davidrios/example-docker-project/commit/33cb5d775b88d8b953ba690c17e1359dd5fa34a5

Now all we have to do is stop compose and run it again. Everything should be running and ready.

To start working with the code we open a new Code window (Ctrl+Shift+N), go to File > Open Folder and open the <PROJECT>/code/vscode/backend directory. A notification should appear right away; just click the Reopen in Container button. You can also open the command palette (Ctrl+Shift+P), type reopen in container and pick Remote-Containers: Reopen in container. It’ll then show a notification that it’s installing the Code server, and after a few moments it should open the project. There will be an indication in the status bar that you are inside the container, like this:

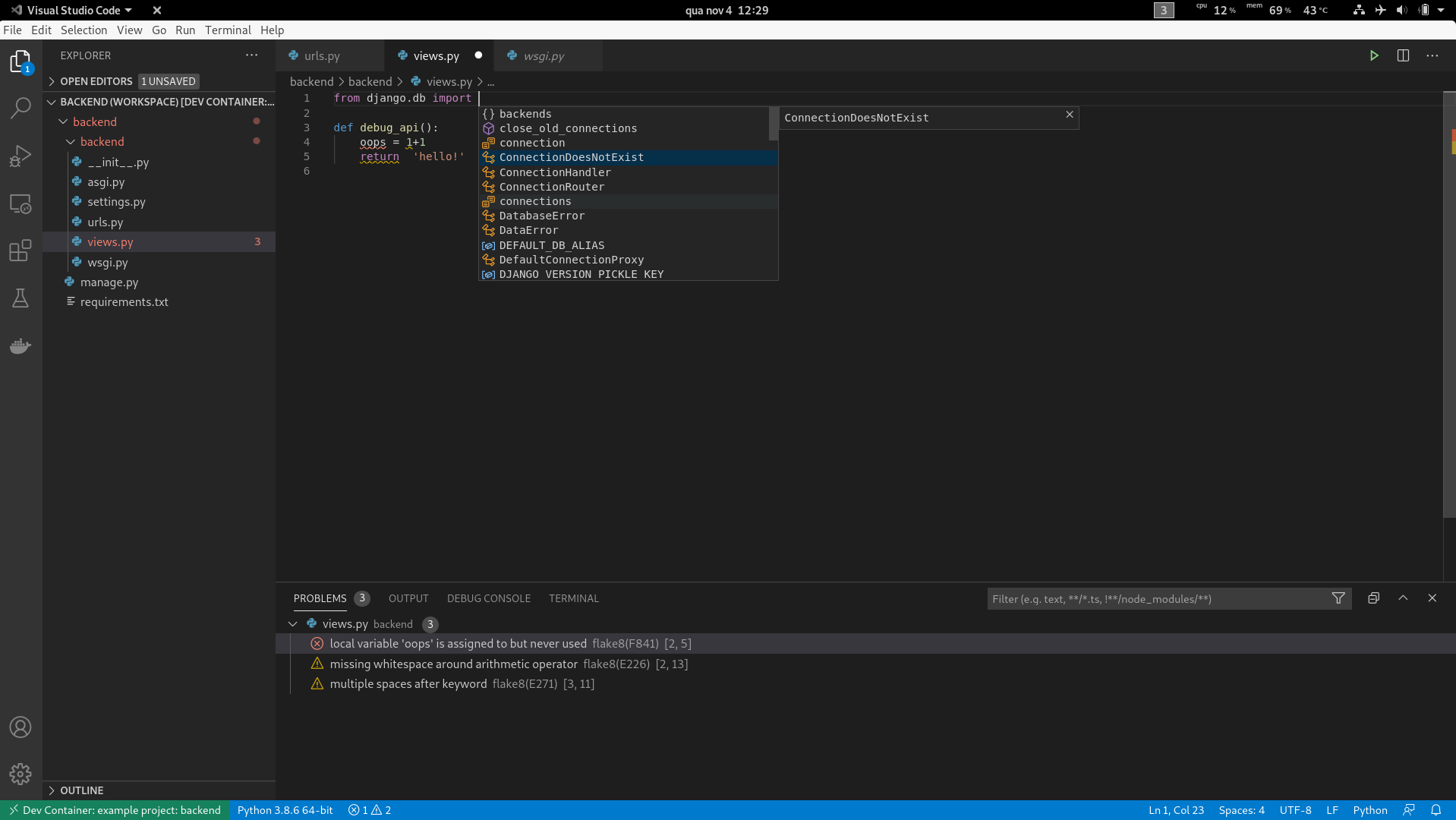

Then you will have all editor goodies, like this:

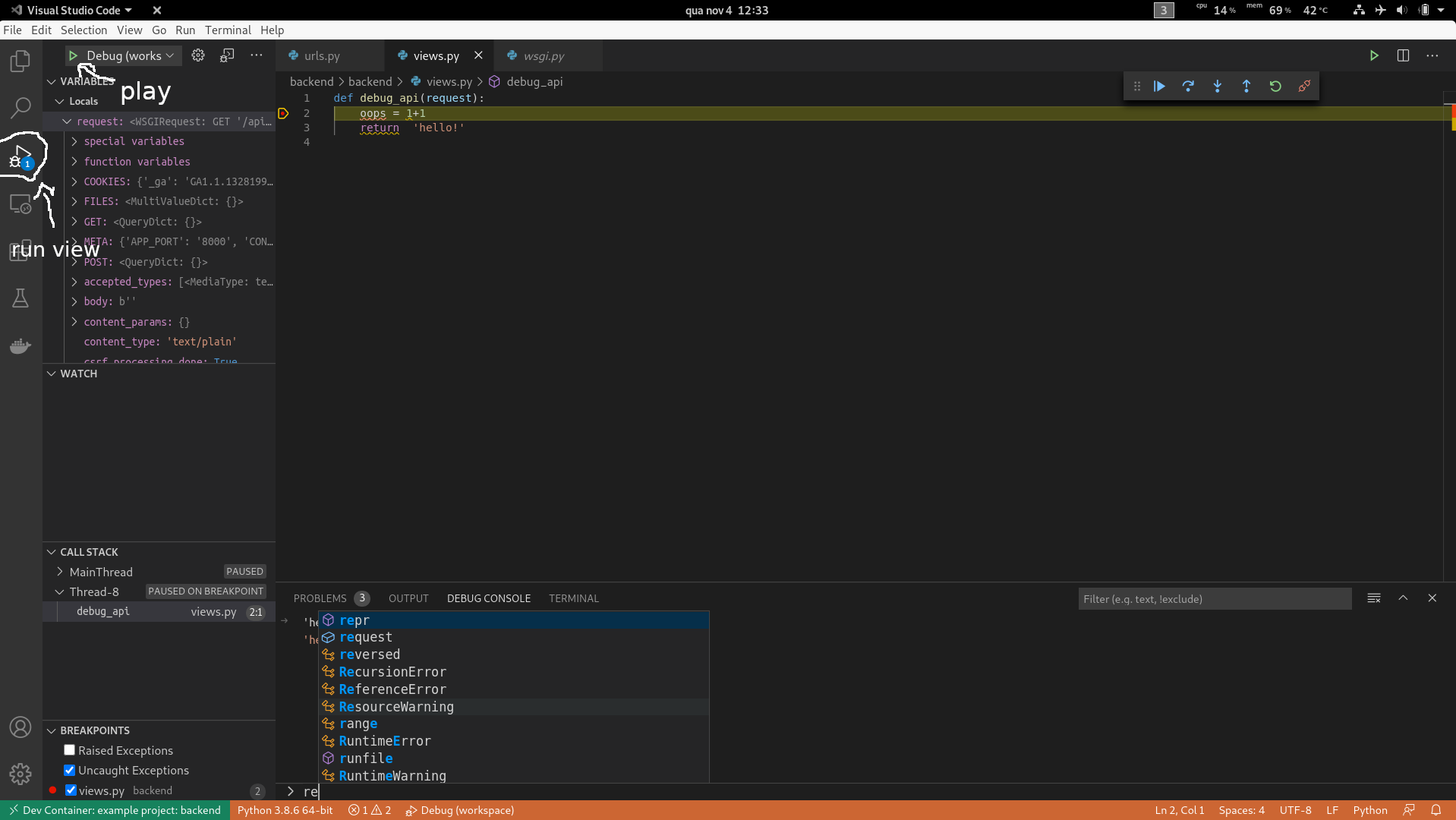

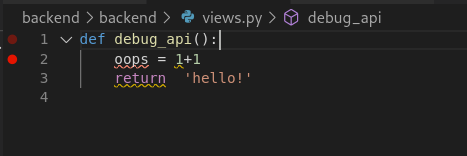

And to debug, you just click the gutter to add a breakpoint, or press F9 at the desired line, like this:

Then go to the Run view by clicking its icon at the side, and click the little green “play” button. The status bar should turn orange to indicate the debugger is connected. If you have a breakpoint, like I did here, and navigate to the new API in the browser, you will be greeted with glorious debugging goodness, like this:

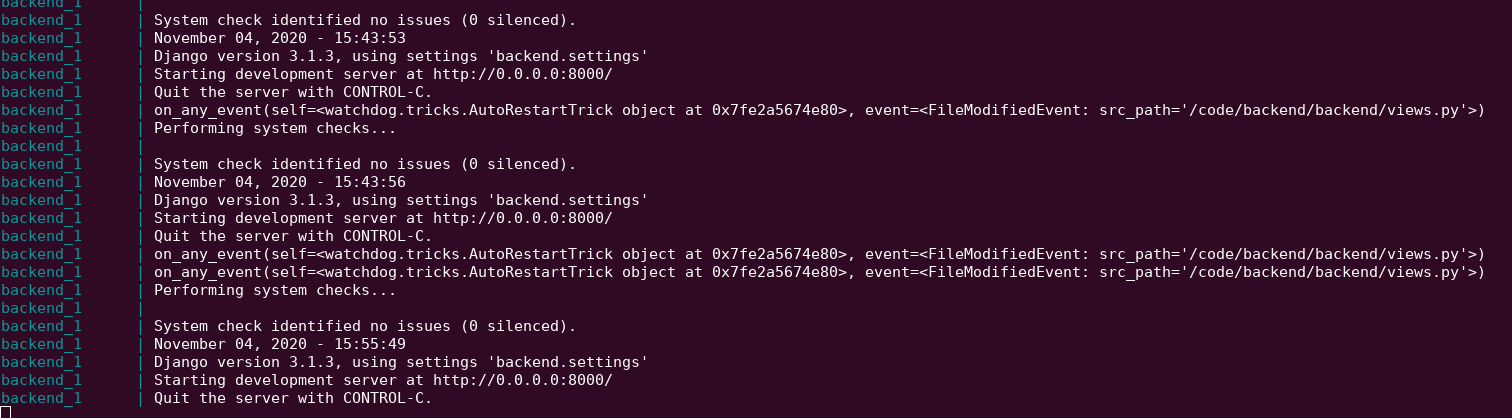

Each time you change some file the app will also reload, like this:

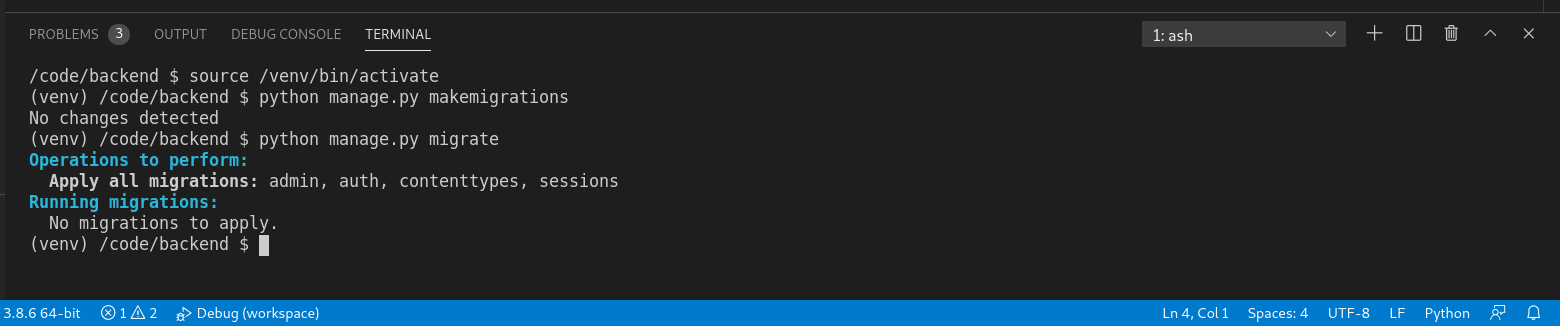

And if you need to run any Django administration commands, you can do so easily with the integrated terminal. Just open the menu Terminal > New Terminal (Ctrl+Shift+<BACKTICK>), like this:

If you find that the terminal does not have the venv activated, just click the trash icon to kill it and open it up again.

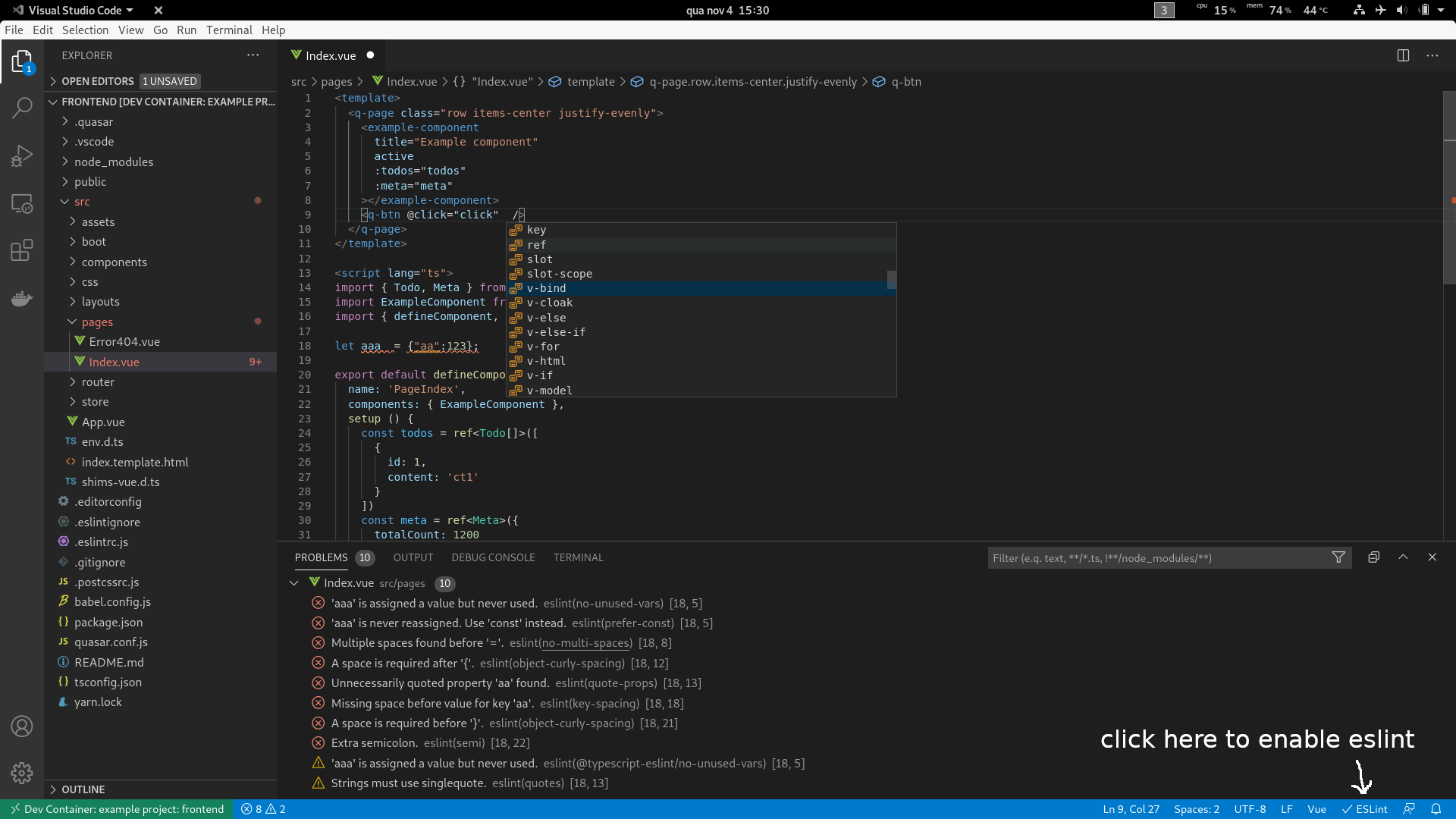

Configuring for the frontend

The configuration here is similar to the above. First create the directory code/vscode/frontend and add the file .devcontainer.json. I’m not configuring a project file here because there’s no other folder besides the frontend project, and because that lets us use the settings files Quasar already creates when initializing the project. You could very well move everything to a project file just like we did with the backend.

Here are the results:

https://github.com/davidrios/example-docker-project/commit/644b7547322d903f7e4b33454b787e5d1d4c6f49

You don’t even need to rerun docker-compose. Just open a new Code window, open the directory code/vscode/frontend and click the Reopen in Container button. After opening the project, click the ESLint button in the status bar and click to allow when the dialog appears. Now you will have a fully configured editor, like so:

You even get autocomplete for things like Quasar components!

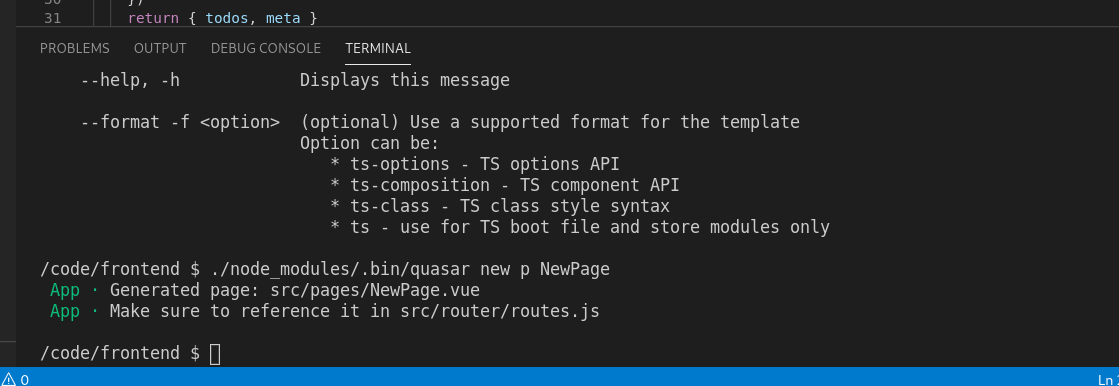

If you want to use the quasar-cli to do things like create a component, just open the integrated terminal from the menu Terminal > New Terminal (Ctrl+Shift+<BACKTICK>), like this:

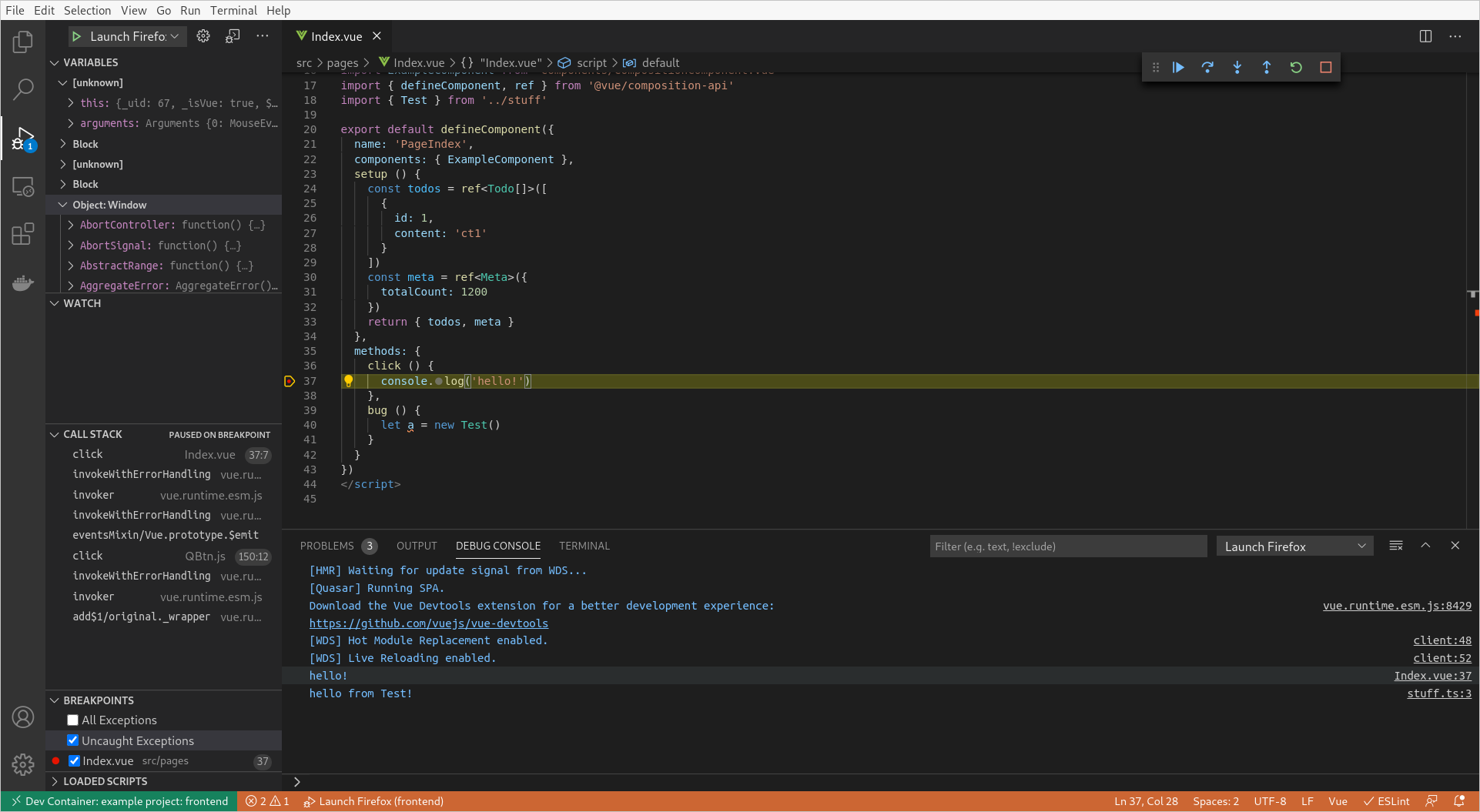

Now it’s time to debug!

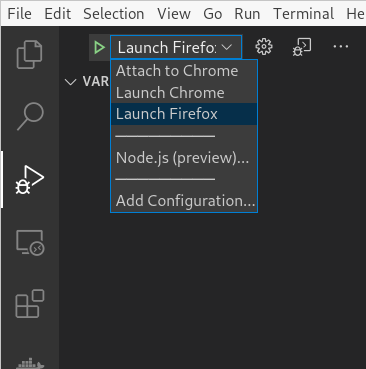

To do that, we create a launch.json with debug tasks for VS Code. This is not entirely trivial, for two reasons:

- VS Code has two Chrome debuggers: a newer one that ships with it and an older one you can install from the marketplace. The newer one is better, but for some reason (I suspect it has to do with the remote container) it can’t launch Chrome instances. The old one can launch instances, but is not as good. So to solve our problems we can use both!

- Since we have a custom port defined in our .env file, we need some way to pass it to the debug task. Unfortunately the debug extensions run in the local editor, not the remote one, so they don’t have access to the env vars. To solve that I added a little prompt when running the task that asks which port the app is running at, with the default from the env template for convenience.
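To make the port prompt a bit more concrete, here is a sketch of the general shape such a launch.json can take, written as a small Python function that builds the structure and could dump it as JSON. It relies on VS Code's input variables (an inputs entry of type promptString, referenced as ${input:...}). The input id appPort, the description text and the function name are assumptions for illustration, and the actual file in the repository may differ.

```python
import json


def build_launch_config(default_port: str = "8000") -> dict:
    """Build a launch.json structure that prompts for the app port."""
    return {
        "version": "0.2.0",
        "inputs": [
            {
                "id": "appPort",            # hypothetical id, referenced below
                "type": "promptString",     # VS Code asks the user for a value
                "description": "Which port is the app running at?",
                "default": default_port,    # same default as .env.template
            }
        ],
        "configurations": [
            {
                "name": "Launch Chrome",
                "type": "chrome",           # the marketplace Chrome debugger
                "request": "launch",
                # ${input:appPort} is resolved by VS Code, not by Python.
                "url": "http://localhost:${input:appPort}",
                "webRoot": "${workspaceFolder}",
            }
        ],
    }


launch_json_text = json.dumps(build_launch_config(), indent=2)
```

Writing launch_json_text to a launch.json file would give one debug task that asks for the port every time it runs, with 8000 pre-filled.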

The added tasks file:

We have three tasks which you will use depending on your browser:

- Attach to Chrome: This task uses the newer debugger to attach to a running Chrome instance with remote debugging enabled, and you don’t need to install anything else. Check here how to run Chrome with remote debugging enabled.

- Launch Chrome: This one launches an instance of Chrome with remote debugging already enabled and using a new profile, so it doesn’t affect your personal one. To use this task you need to have the Debugger for Chrome extension installed.

- Launch Firefox: And this launches an instance (or attaches to one if running) of Firefox with remote debugging enabled and also using a new profile. To use this task you need to have the Debugger for Firefox extension installed.

There’s one more thing we can do to make our lives better while debugging. The default webpack config doesn’t generate detailed sourcemaps, which makes debugging a pain and setting breakpoints in transpiled files pretty much impossible. We fix that by adding devtool: 'eval-source-map' to its settings.

Even then, for transpiled files like .vue files, it generates a lot of variants of the same file with different hash names for each of the intermediate results, which is kind of annoying, so we fix that too by customizing the output.devtoolModuleFilenameTemplate function.

Since this project uses Quasar, we do that the way it’s supposed to be done, by editing quasar.conf.js.

It ends up looking like this:

https://github.com/davidrios/example-docker-project/commit/1208e30bd280179df0780add22430af3a54c6c30

Now you can run the debugger by going to the Run view, selecting a task and clicking “play”:

Try adding some console.logs and/or setting a breakpoint, running debug mode and using the app. You should then see something like this:

Addendum

I’m not a huge fan of doing too much inside the code editor, especially things that are much more flexible to do in the terminal. That’s why I don’t use the Code Docker extension to manage/run docker-compose, for example. I also think it works better that way when you have a project with several containers with different apps, like in this guide.

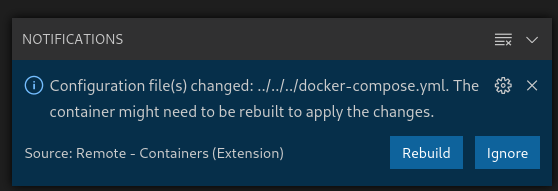

Since we are managing the compose flow manually, sometimes VS Code can show you this notification:

I recommend that you just click Ignore. If you need to rebuild things, just stop the compose running in the terminal and execute docker-compose up --build again.

Conclusion

There are a lot of things to improve and different choices you could make for the various pieces. These are the ones I made and that worked best for me.

Do note that this whole configuration is better suited to individuals and small teams. Larger teams have other, often mutually exclusive, requirements.

This is all very development focused, so the container images are not real ones that you can use to deploy, but that is doable with a little more work.

Thanks for reading!